Perhaps I shouldn't try to make resolutions: I resolved to blog book notes till the end of the year, and instead I'm writing something about estimation.

A power law is a relationship of the form $y = \gamma_0 x^{\gamma_1}$ and can be linearized for estimation using OLS (with a very stretchy assumption on stochastic disturbances, but let's not quibble) into

$\log(y) = \beta_0 + \beta_1 \log(x) +\epsilon$,

from which the original parameters can be trivially recovered:

$\hat\gamma_0 = \exp(\hat\beta_0)$ and $\hat\gamma_1 = \hat\beta_1$.
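As a minimal sketch of the linearization above (not anyone's canonical implementation), the log-log OLS fit can be written with the Python standard library alone. The function name `fit_power_law` and the sample values $\gamma_0 = 3$, $\gamma_1 = 1.5$ are illustrative assumptions; the data here is noise-free, so the fit simply recovers the parameters used to generate it.

```python
import math

def fit_power_law(xs, ys):
    """Fit log(y) = beta0 + beta1 * log(x) by OLS; return (gamma0_hat, gamma1_hat)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx = sum(lx) / n
    my = sum(ly) / n
    # Closed-form simple-regression estimates: slope = S_xy / S_xx.
    sxx = sum((u - mx) ** 2 for u in lx)
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    beta1 = sxy / sxx
    beta0 = my - beta1 * mx
    # Recover the original parameters: gamma0 = exp(beta0), gamma1 = beta1.
    return math.exp(beta0), beta1

# Hypothetical noise-free data generated from y = 3 * x^1.5.
xs = [1.0, 2.0, 4.0, 8.0, 16.0]
ys = [3.0 * x ** 1.5 for x in xs]
gamma0_hat, gamma1_hat = fit_power_law(xs, ys)
print(gamma0_hat, gamma1_hat)
```

Both $x$ and $y$ must be strictly positive for the logarithms to exist, which is one more reason the disturbance assumption is stretchy.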

Power laws are plentiful in Nature, especially when one includes the degree distribution of social networks in a – generous and uncommon, I admit it – definition of Nature. A commonly proposed source of power law degree distributions is preferential attachment in network formation: the probability of a new node $i$ being connected to an old node $j$ is an increasing function of the degree of $j$.
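To make preferential attachment concrete, here is a small simulation sketch. It assumes the simplest increasing function, probability exactly proportional to degree (the linear form used in Barabási–Albert-style models), and one new edge per arriving node; both choices, and the names `preferential_attachment` and `degree_counts`, are illustrative assumptions rather than anything the text above commits to.

```python
import random
from collections import Counter

def preferential_attachment(n_nodes, seed=0):
    """Grow a network one node at a time; return the list of (new, old) edges."""
    rng = random.Random(seed)
    edges = [(1, 0)]        # start from two connected nodes
    # Each node appears in `endpoints` once per unit of degree, so a uniform
    # draw from it picks old node j with probability proportional to deg(j).
    endpoints = [1, 0]
    for new in range(2, n_nodes):
        old = rng.choice(endpoints)
        edges.append((new, old))
        endpoints.extend((new, old))
    return edges

def degree_counts(edges):
    """Return a Counter mapping node -> degree."""
    deg = Counter()
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg

edges = preferential_attachment(1000)
deg = degree_counts(edges)
# Histogram of degrees: how many nodes have degree 1, 2, 3, ...
histogram = Counter(deg.values())
print(sorted(histogram.items())[:10])
```

The degree histogram from a run like this is the kind of data one would then feed into a power law estimation.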

The problem with power laws in the wild is that they are really hard to estimate precisely, and I got very annoyed at the glibness of some articles, which report estimation of power laws in a highly dequantized manner: they don't actually show the estimates or their descriptive statistics, only charts with no error bars.

Here's my problem: it's well-known that even small stochastic disturbances can make parameter identification in power law data very difficult. And yet, that is never mentioned in those papers. This omission, coupled with the lack of actual estimates and their descriptive statistics, is unforgivable. And suspicious.
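One way to probe this claim is a small Monte Carlo sketch: simulate many data sets from a known power law, estimate the exponent on each, and look at the spread of the estimates. Everything specific here is an assumption for illustration: the parameter values, the $x$ grid from 1 to 20, and especially the noise, which is taken as multiplicative log-normal (the friendliest case for the log-log regression; noise that is additive in the original scale would behave differently). No particular output is claimed.

```python
import math
import random
import statistics

def loglog_slope(xs, ys):
    """OLS slope of log(y) on log(x), i.e. the exponent estimate."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    sxx = sum((u - mx) ** 2 for u in lx)
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    return sxy / sxx

def slope_spread(sigma, reps=200, seed=1, gamma0=2.0, gamma1=-1.5):
    """Return (mean, standard deviation) of exponent estimates over `reps` samples."""
    rng = random.Random(seed)
    xs = [float(k) for k in range(1, 21)]
    estimates = []
    for _ in range(reps):
        # Multiplicative log-normal disturbance: additive epsilon after taking logs.
        ys = [gamma0 * x ** gamma1 * math.exp(rng.gauss(0.0, sigma)) for x in xs]
        estimates.append(loglog_slope(xs, ys))
    return statistics.mean(estimates), statistics.stdev(estimates)

for sigma in (0.05, 0.2, 0.5):
    mean_hat, sd_hat = slope_spread(sigma)
    print(f"sigma={sigma}: mean exponent {mean_hat:.3f}, spread {sd_hat:.3f}")
```

Reporting the spread alongside the point estimate is exactly the descriptive statistic that the charts-only papers leave out.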

Perhaps this needs a couple of numerical examples to clarify; as they say at the end of each season of television shows now:

– To be continued –

Non-work posts by Jose Camoes Silva; repurposed in May 2019 as a blog mostly about innumeracy and related matters, though not exclusively.

Wednesday, December 21, 2011

Tuesday, December 20, 2011

Marginalia: Writing in one's books

I've done it for a long time now, shocking behavior though it is to some of my family and friends.

WHY I make notes

Some of my family members and friends are shocked that I write in my books. The reasons to keep the books in pristine condition vary from maintaining resale value (not an issue for me, as I don't think of books as transient presences in my life) to keeping the integrity of the author's work. Obviously, if I had a first edition of Newton's Principia, I wouldn't write in it; the books I write on are workaday copies, many of them cheap paperbacks or technical books.

I make notes for three reasons:

To better understand the book as I read it. Actively reading a book, especially a non-fiction or work book, is essentially a dialog between the book and the knowledge I can access, both in my mind and in outside references. Deciding what is important enough to highlight and what points deserve further elaboration in the form of commentary or an example that I furnish, makes reading a much more immersive experience than simply processing the words.

To collect my ideas from several readings (I read many books more than once) into a place where they are not lost. Sometimes points from a previous reading are more clarifying to me than the text itself, sometimes I disagree vehemently with what I wrote before.

To refer to later when I need to find something in the book. This is particularly important in books that I read for work, in particular for technical books where many of the details have been left out (for space reasons) but I added notes that fill those in for the parts I care about.

WHAT types of notes I make

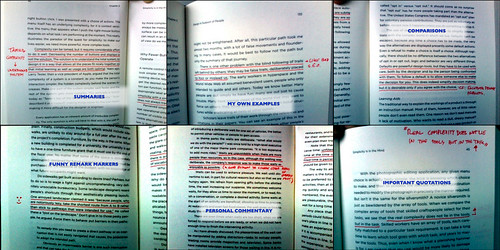

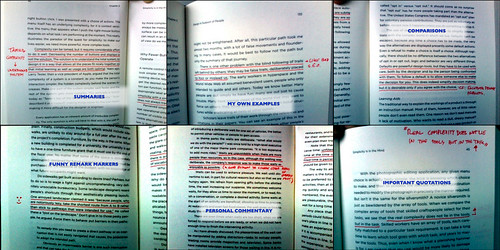


In an earlier post about marginalia on my personal blog I included this image (click for bigger),

showing some notes I made while reading the book Living With Complexity, by Donald Norman. These notes fell into six cases:

Summaries of the arguments in text. Often texts will take long circuitous routes to get to the point. (Norman's book is not one of these.) I tend to write quick summaries, usually in implication form like the one above, that cut down the entropy.

My examples to complement the text. Sometimes I happen to know better examples, or examples that I prefer, than those in the book; in that case I tend to note them in the book so that the example is always connected to the context in which I thought of it. This is particularly useful in work books (and papers, of course) when I turn them into teaching or executive education materials.

Comparisons with external materials. In this case I make a note to compare Norman's point about default choices with the problems Facebook faced in similar matters regarding its privacy.

Notable passages. Marking funny passages with smiley faces and surprising passages with an exclamation point helps find these when browsing the book quickly. Occasionally I also mark passages for style or felicitous turn of phrase, typically with "nice!" on the margin.

Personal commentary. Sometimes the text provokes some reaction that I think is worth recording in the book. I don't write review-like commentary in books as a general rule, but I might note something about missing or hidden assumptions, innumeracy, biases, statistical issues; I might also comment positively on an idea, for example, that I had never thought of except for the text.

Quotable passages. These are self-explanatory and particularly easy to make on eBooks. Here's one from George Orwell's Homage To Catalonia:

The constant come-and-go of troops had reduced the village to a state of unspeakable filth. It did not possess and never had possessed such a thing as a lavatory or a drain of any kind, and there was not a square yard anywhere where you could tread without watching your step. (Chapter 2.)

A few other types of marginalia that I have used in other books:

Proofs and analysis to complement what's in the text. As an example, in a PNAS paper on predictions based on search, the authors call $\log(y) = \beta_0 + \beta_1 \log(x)$ a linear model, with the logarithms used to account for the skewness of the variables. I inserted a note that this is clearly a power law relationship, not a linear relationship, with the two steps of algebra that show $y = e^{\beta_0} \times x^{\beta_1}$, in case I happen to be distracted when I reread this paper and can't think through the baby math.

Adding missing references or checking the references (which sometimes are incorrect, in which case I correct them). Yep, I'm an academic nerd at heart; but these are important, like a chain of custody for evidence or the provenance records for a work of art.

Diagrams clarifying complicated points. I do this in part because I like visual thinking and in part because if I ever need to present the material to an audience I'll have a starting point for visual support design.

Data that complements the text. Sometimes the text is dequantized and refers to a story for which data is available. I find that adding the data to the story helps me get a better perspective and also if I ever want to use the story I'll have the data there to make a better case.

Counter-arguments. Sometimes I disagree with the text, or at least with the lack of feasible counter-arguments (even when I agree with a position I don't like that the author presents the opposing points of view only in strawman form), so I write the counter-arguments in order to remind me that they exist and the presentation in the text doesn't do them justice.

Markers for things that I want to get. For example, while reading Ted Gioia's The History of Jazz, I marked several recordings that he mentions for acquisition; when reading technical papers I tend to mark the references I want to check; when reading reviews I tend to add things to wishlists (though I also prune these wishlists often).

HOW to make notes

A few practical points for writing marginalia:

Highlighters are not good for long-term notes. They either darken significantly, making it hard to read the highlighted text, or they fade, losing the highlight. I prefer underlining with a high contrast color for short sentences or segments or marking beginning and end of passages on the margin.

Margins are not the only place. I add free-standing inserts, usually in the form of large Post-Its or pieces of paper. Important management tip: write the page number the note refers to on the note.

Transcribing important notes to a searchable format (a text file on my laptop) makes it easy to find stuff later. This is one of the advantages of eBooks of the various types (Kindle, iBook, O'Reilly PDFs), making it easy to search notes and highlights.

Keeping a commonplace book of felicitous turns of phrase (the ones in the books and the ones I come up with) either in a file or on an old-style paper journal helps me become a better writer.

-- -- -- --

Note: This blog may become a little more varied in topics as I decided to write posts more often to practice writing for a general audience. After all, the best way to become a better writer is to write and let others see it. (No comments on the blog, but I get plenty by email from people I know.)

Monday, December 12, 2011

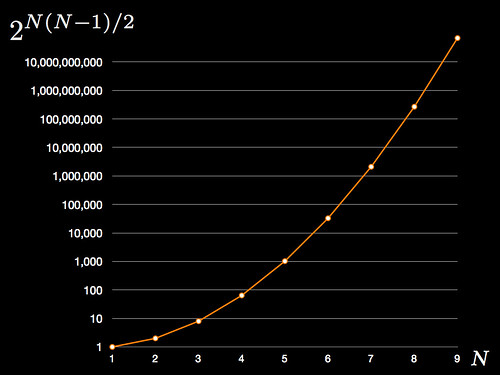

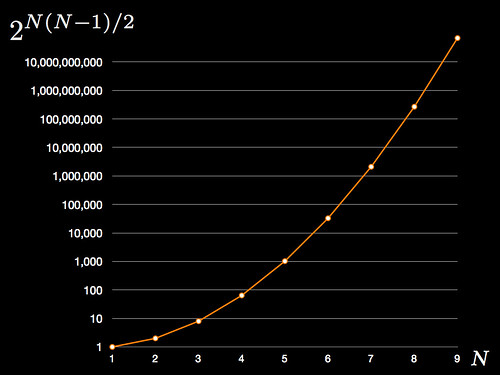

How many possible topologies can a N-node network have?

Short answer, for an undirected network: $2^{N(N-1)/2}$.

Essentially, the number of possible edges (pairs of distinct nodes) is $N(N-1)/2$, so the number of possible topologies is two raised to that number, capturing every case where each edge can be either present or absent. For a directed network the number of possible edges is twice that of an undirected network, so the number of possible topologies is the square of the undirected count (or just remove the $/2$ part from the formula above).

To show how quickly things get out of control, here are some numbers:

$N=1 \Rightarrow 1$ topology

$N=2 \Rightarrow 2$ topologies

$N=3 \Rightarrow 8$ topologies

$N=4 \Rightarrow 64$ topologies

$N=5 \Rightarrow 1024$ topologies

$N=6 \Rightarrow 32,768$ topologies

$N=7 \Rightarrow 2,097,152$ topologies

$N=8 \Rightarrow 268,435,456$ topologies

$N=9 \Rightarrow 68,719,476,736$ topologies

$N=10 \Rightarrow 35,184,372,088,832$ topologies

$N=20 \Rightarrow 1.5693 \times 10^{57}$ topologies

$N=30 \Rightarrow 8.8725 \times 10^{130}$ topologies

$N=40 \Rightarrow 6.3591 \times 10^{234}$ topologies

$N=50 \Rightarrow 5.7776 \times 10^{368}$ topologies
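The numbers above can be checked with a short sketch that relies on Python's arbitrary-precision integers. The function names are illustrative assumptions; the brute-force enumeration treats nodes as labeled (as the formula does) and is only feasible for very small $N$, which is rather the point.

```python
from itertools import combinations, product

def count_topologies(n, directed=False):
    """Number of labeled networks on n nodes: 2 raised to the number of possible edges."""
    pairs = n * (n - 1) // 2
    possible_edges = 2 * pairs if directed else pairs
    return 2 ** possible_edges

def brute_force_count(n):
    """Enumerate every present/absent choice over node pairs (small n only)."""
    pairs = list(combinations(range(n), 2))
    seen = set()
    for mask in product((False, True), repeat=len(pairs)):
        # The topology is the set of edges that are switched on.
        seen.add(frozenset(p for p, on in zip(pairs, mask) if on))
    return len(seen)

for n in (1, 2, 3, 4, 5, 10):
    print(n, count_topologies(n))

# For large n, print in scientific notation; the exact integer is still available.
for n in (20, 30, 40, 50):
    exact = count_topologies(n)
    digits = str(exact)
    print(f"N={n}: {digits[0]}.{digits[1:5]} x 10^{len(digits) - 1}")
```

The enumeration for $N=5$ already visits 1024 edge sets; past $N=7$ or so it stops being something one wants to run casually.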

This is the reason why any serious analysis of a network requires the use of mathematical modeling and computer processing: our human brains are not equipped to deal with this kind of exploding complexity.

And for the visual learners, here's a graph denoting the pointlessness of trying to grasp network topologies "by hand" (note logarithmic vertical scale):


Saturday, December 3, 2011

Why I'm not a fan of "presentation training"

Because there are too many different types of presentation for any sort of abstract training to be effective. So "presentation training" ends up – at best – being "presentation software training."

Learning about information design, writing and general verbal communication, stage management and stage presence, and operation of software and tools used in presentations may help one become a better presenter. But, like in so many technical fields, all of these need some study of the foundations followed by a lot of field- and person-specific practice.

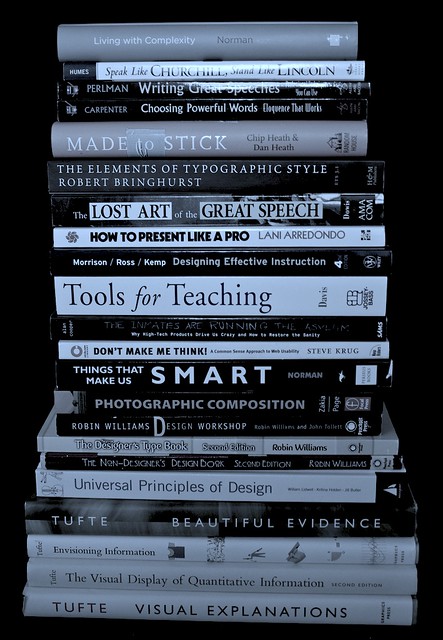

I recommend Edward Tufte's books (and seminar) for information design; Strunk and White's The Elements of Style, James Humes's Speak like Churchill, Stand like Lincoln, and William Zinsser's On Writing Well for verbal communication; and a quick read of the manual followed by exploration of the presentation software one uses. I have no recommendations regarding stage management and stage presence short of joining a theatre group, which is perhaps too much of a commitment for most presenters.

I have already written pretty much all I think about presentation preparation; the present post is about my dislike of "presentation training." To be clear, this is not about preparation for teaching or training to be an instructor. These, being specialized skills – and typically field-specific skills – are a different case.

Problem 1: Generic presentation training is unlikely to help any but the most incompetent of presenters

Since an effective presentation is one designed for its objective, within the norms of its field, targeted to its specific audience, and using the technical knowledge of its field, what use is it to learn generic rules, beyond the minimum of information design, clarity in verbal expression, and stage presence?

(My understanding from people who have attended presentation training is that there was little about information design, nothing about verbal expression, and just platitudes about stage presence.)

For someone who knows nothing about presentations and learns the basics of operating the software, presentation training may be of some use. I think Tufte made this argument: the great presenters won't be goaded into becoming "death by powerpoint" presenters just because they use the software; the terrible presenters will be forced to come up with some talking points, which may help their presentations be less disastrous. But the rest will become worse presenters by focussing on the software and some hackneyed rules – instead of the content of and the audience for the presentation.

Problem 2: Presentation trainers tend to be clueless about the needs of technical presentations

Or, the Norman Critique of the Tufte Table Argument, writ large.

The argument (which I wrote as point 1 in this post) is essentially that looking at a table, a formula, or a diagram as a presentation object – understanding its aesthetics, its information design, its use of color and type – is very different from looking at a table to make sense of the numbers therein, understand the implications of a formula to a mathematical or chemical model, and interpret the implications of the diagram for its field.

Tufte, in his attack on Powerpoint, talks about a table but focusses on its design, not how the numbers would be used, which is what prompted Donald Norman to write his critique; but, of all the people who could be said to be involved in presentation training, Tufte is actually the strongest advocate for content.

The fact remains that there's a very big difference between technical material which is used as a prop to illustrate some presentation device or technique to an audience which is mostly outside the technical field of the material and the same material being used to make a technical point to an audience of the appropriate technical field.

Presentation training, being generic, cannot give specific rules for a given field; but those rules are actually useful to anyone in the field who has questions about how to present something.

Problem 3: Presentation training actions are typically presentations (lectures), which is not an effective way to teach technical material

The best way to teach technical material is to have the students prepare by reading the foundations (or watching video on their own, allowing them to pace the delivery by their own learning speed) and preparing for a discussion or exercise applying what they learned.

This is called participant-centered learning; it's the way people learn technical material. Even in lecture courses the actual learning only happens when the students practice the material.

Almost all presentation training is done in lecture form, delivered as a presentation from the instructor with question-and-answer periods for the audience. But since the audience doesn't actually practice the material in the lecture, they may have only questions of clarification. The real questions that appear during actual practice don't come up during a lecture, and those are the questions that really need an answer.

Problem 4: Most presentation training is too narrowly bracketed

Because it's generic, presentation training misses the point of making a presentation to begin with.

After all, presentations aren't made in a vacuum: there's a purpose to the presentation (say, report market research to decision-makers), an audience with specific needs (product designers who need to understand the parameters of the consumer choice so they can tweak the product line), supporting material that may be used for further reference (a written report with the details of the research), action items and metrics for those items (follow-up research and a schedule of deliverables and budget), and other elements that depend on the presentation.

There's also the culture of the organization which hosts the presentation, disclosure and privacy issues, reliability of sources, and a host of matters apparently unrelated to a presentation that determine its success a lot more than the design of the slides.

In fact, the use of slides, or the idea of a speaker talking to an audience, is itself a constraint on the type of presentations the training is focussed on. And that trains people to think of a presentation as a lecture-style presentation. Many presentations are interactive, perhaps with the "presenter" taking the position of moderator or arbitrator; some presentations are made in roundtable fashion, as a discussion where the main presenter is one of many voices.

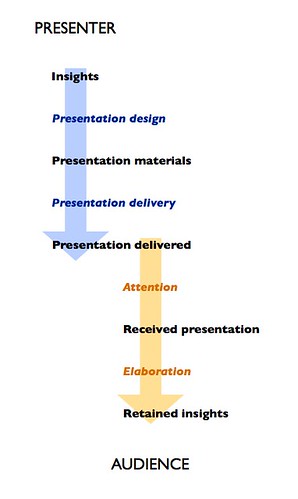

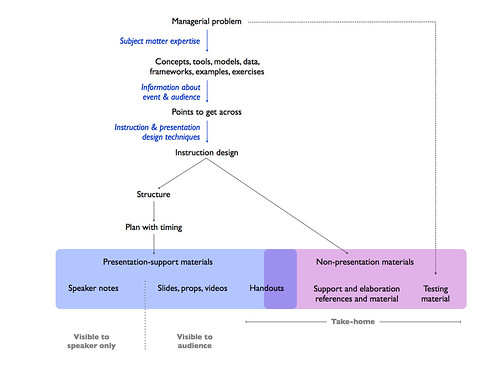

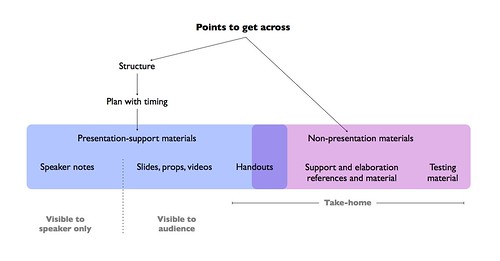

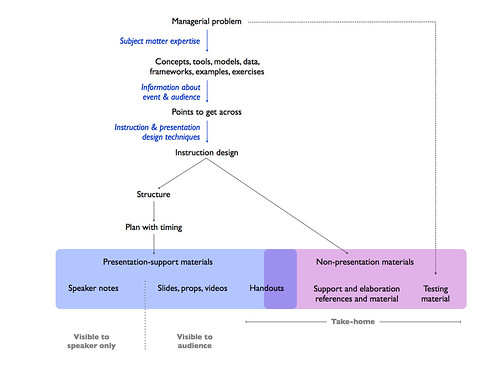

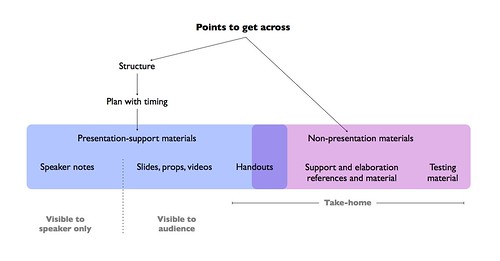

Some time ago, I summarized a broader view of a specific type of presentation event (data scientists presenting results to managers) in this diagram, illustrating why and how I thought data scientists should take more care with presentation design (click for larger):

(Note that this is specific advice for people making presentations based on data analysis to managers or decision-makers that rely on the data analysis for action, but cannot do the analysis themselves. Hence the blue rules on the right to minimize the miscommunication between the people from two different fields. This is what I mean by field-specific presentation training.)

These are four reasons why I don't like generic presentation training. Really it's just one: generic presentation training assumes that content is something secondary, and that assumption is the reason why we see so many bad presentations to begin with.

NOTE: Participant-centered learning is a general term for using the class time for discussion and exercises, not necessarily for the Harvard Case Method, which is one form of participant-centered learning.

Related posts:

Posts on presentations in my personal blog.

Posts on teaching in my personal blog.

Posts on presentations in this blog.

My 3500-word post on preparing presentations.


These are four reasons why I don't like generic presentation training. Really it's just one: generic presentation training assumes that content is something secondary, and that assumption is the reason why we see so many bad presentations to begin with.

NOTE: Participant-centered learning is a general term for using the class time for discussion and exercises, not necessarily for the Harvard Case Method, which is one form of participant-centered learning.

Related posts:

Posts on presentations in my personal blog.

Posts on teaching in my personal blog.

Posts on presentations in this blog.

My 3500-word post on preparing presentations.

Labels: presentations, teaching

Friday, December 2, 2011

Dilbert gets the Correlation-Causation difference wrong

This was the Dilbert comic strip for Nov. 28, 2011:

It seems to imply that even though there's a correlation between the pointy-haired boss leaving Dilbert's cubicle and receiving an anonymous email about the worst boss in the world, there's no causation.

THAT IS WRONG!

Clearly there's causation: PHB leaves Dilbert's cubicle, which causes Wally to send the anonymous email. PHB's implication that he thinks Dilbert sends the email is wrong, but that doesn't mean that the correlation he noticed isn't in this case created by a causal link between leaving Dilbert's cubicle and getting the email.

I think Edward Tufte once said that the statement "correlation is not causation" was incomplete; at least it should read "correlation is not causation, but it sure hints at some relationship that must be investigated further." Or words to that effect.

Labels: causality, Dilbert, Probability, statistics

Friday, November 25, 2011

Online Education and the Dentist vs Personal Trainer Models of Learning

I'm a little skeptical about online education. About 2/3 skeptical.

Most of the (traditional) teaching I received was squarely based on what I call the Dentist Model of Education: a [student|patient] goes into the [classroom|dentist's office] and the [instructor|dentist] does something technical to the [student|patient]. Once the professional is done, the [student|patient] goes away and [forgets the lecture|never flosses].

I learned almost nothing from that teaching. Like every other person in a technical field, I learned from studying and solving practice problems. (Rule of thumb: learning is 1% lecture, 9% study, 90% practice problems.)

A better education model, the Personal Trainer Model of Education, asserts that, as in fitness training, results come from the [trainee|student] practicing the [movements|materials] himself/herself. The job of the [personal trainer|instructor] is to guide that practice and select [exercises|materials] that are appropriate to the [training|instruction] objectives.

Which is why I'm two-thirds skeptical of the goodness of online education.

Obviously there are advantages to online materials: there's low distribution cost, which allows many people to access high quality materials; there's a culture of sharing educational materials, spearheaded by some of the world's premier education institutions; there are many forums, question and answer sites and – for those willing to pay a small fee – actual online courses with instructors and tests.

Leaving aside the broad accessibility of materials, there's no getting around the 1-9-90 rule for learning. Watching Walter Lewin teaching physics may be entertaining, but without practicing, by solving problem sets, no one watching will become a physicist.

Consider the plethora of online personal training advice and assume that the aspiring trainee manages to find a trainer who knows what he/she is doing. Would this aspiring trainee get better at her fitness exercises by reading a web site and watching videos of the personal trainer exercising? And yet some people believe that they can learn computer programming by watching online lectures. (Or offline lectures, for that matter.*)

If practice is the key to success, why do so many people recognize the absurdity of the video-watching, gym-avoiding fitness trainee while at the same time assume that online lectures are the solution to technical education woes?

(Well-designed online instruction programs are much more than lectures, of course; but what most people mean by online education is not what I consider well-designed and typically is an implementation of the dentist model of education.)

The second reason why I'm skeptical (hence the two-thirds share of skepticism) is that the education system has a second component, beyond instruction: it certifies skills and knowledge. (We could debate how well it does this, but certification is one of the main functions of education institutions.)

Certification of a specific skill can be done piecemeal but complex technical fields depend on more than a student knowing the individual skills of the field; they require the ability to integrate across different sub-disciplines, to think like a member of the profession, to actually do things. That's why engineering students have engineering projects, medical students actually treat patients, etc. These are part of the certification process, which is very hard to do online or with short in-campus events, even if we remove questions of cheating from the mix.

There's enormous potential in online education, but it can only be realized by accepting that education is not like a visit to the dentist but rather like a training session at the gym. And that real, certified learning requires a lot of interaction between the education provider and the student: not something like the one-way lectures one finds online.

(This is not to say that there aren't some good online education programs, but they tend to be uncommon.)

Just like the best-equipped gym in the world will do nothing for a lazy trainee, the best online education platform in the world will do nothing for an unmotivated student. But a motivated kid with nothing but a barbell & plates can become a competitive powerlifter and a motivated kid with a textbook will learn more than the hordes who watch online lectures while tweeting and facebooking.

The key success factor is not technology; it's the student. It always is.

ADDENDUM (Nov 27, 2011): I've received some comments to the effect that I'm just defending universities from the disruptive innovation of entrants. Perhaps, but:

Universities have several advantages over new institutions, especially when so many of these new institutions have no understanding of what technical education requires. If there were a new online way to sell hamburgers, would it surprise anyone that McDs and BK were better at doing it than people who are great at online selling engineering but who never made a hamburger in their lives?

This is not to say that there isn't [vast] room to improve in both the online and offline offerings of universities. But it takes a massive dose of arrogance to assume that everything that went before (in regards to education) can be ignored because of a low cost of content distribution.

--------

* For those who never learned computer programming: you learn by writing programs and testing them. Many many many programs and many many many tests. A quick study of the basics of the language in question is necessary, but better done individually than in a lecture room. Sometimes the learning process can be jump-started by adapting other people's programs. A surefire way to not learn how to program is to listen to someone else talk about programming.

Labels: education

Thursday, November 24, 2011

Data cleaning or cherry-picking?

Sometimes there's a fine line between data cleaning and cherry-picking your data.

My new favorite example of this is based on something Nassim Nicholas Taleb said at a talk at Penn (starting at 32 minutes in): that 92% of all kurtosis for silver in the last 40 years of trading could be traced to a single day; 83% of stock market kurtosis could also be traced to one day in 40 years.

One day in forty years is about 1/14,600 of all data. Such a disproportionate effect might lead some "outlier hunters" to discard that one data point. After all, there are many data butchers (not scientists if they do this) who create arbitrary rules for outlier detection (say, more than four standard deviations away from the mean) and use them without thinking.

In the NNT case, however, that would be counterproductive: the whole point of measuring kurtosis (or, in his argument, the problem that kurtosis is not measurable in any practical way) is to hedge against risk correctly. Underestimating kurtosis will create ineffective hedges, so disposing of the "outlier" will undermine the whole point of the estimation.
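To make the "one day carries most of the kurtosis" idea concrete, here is a minimal sketch on made-up numbers (not Taleb's data). It assumes that "traced to a single day" can be read as that day's share of the summed fourth-power deviations from the mean, which is the numerator of sample kurtosis; the function name and the synthetic series are illustrative assumptions.

```python
def largest_share_of_fourth_moment(xs):
    """Fraction of the summed fourth-power deviations due to the single largest one."""
    mean = sum(xs) / len(xs)
    fourth = [(x - mean) ** 4 for x in xs]
    return max(fourth) / sum(fourth)


# Synthetic, made-up series: 1,000 ordinary days of +/-1 moves plus one extreme day.
returns = [1.0, -1.0] * 500 + [20.0]
share = largest_share_of_fourth_moment(returns)
print(f"share of fourth moment from one day: {share:.1%}")

# A mechanical "more than four standard deviations" rule flags exactly that day.
mean = sum(returns) / len(returns)
std = (sum((x - mean) ** 2 for x in returns) / len(returns)) ** 0.5
flagged = [x for x in returns if abs(x - mean) > 4 * std]
print("flagged by the 4-sigma rule:", flagged)
```

In this toy series the one day that dominates the fourth moment is also the one the mechanical rule would throw away, so discarding it removes nearly all the information about tail risk.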

In a recent research project I removed one data point from the analysis, deeming it an outlier. But I didn't do it because it was four standard deviations from the mean alone. I found it because it did show an aggregate behavior that was five standard deviations higher than the mean. Then I examined the disaggregate data and confirmed that this was anomalous behavior: the experimental subject had clicked several times on links and immediately clicked back, not even looking at the linked page. This temporally disaggregate behavior, not the aggregate measure of total clicks, was the reason why I deemed the datum an outlier, and excluded it from analysis.

Data cleaning is an important step in data analysis. We should take care to ensure that it's done correctly.

Sunday, November 13, 2011

Vanity Fair bungles probability example

There's an interesting article about Danny Kahneman in Vanity Fair, written by Michael Lewis. Kahneman's book Thinking, Fast and Slow is an interesting review of the state of decision psychology and well worth reading, as is the Vanity Fair article.

But the quiz attached to that article is an example of how not to popularize technical content.

This example, question 2, is wrong:

A team of psychologists performed personality tests on 100 professionals, of which 30 were engineers and 70 were lawyers. Brief descriptions were written for each subject. The following is a sample of one of the resulting descriptions:

Jack is a 45-year-old man. He is married and has four children. He is generally conservative, careful, and ambitious. He shows no interest in political and social issues and spends most of his free time on his many hobbies, which include home carpentry, sailing, and mathematics.

What is the probability that Jack is one of the 30 engineers?

A. 10–40 percent

B. 40–60 percent

C. 60–80 percent

D. 80–100 percent

If you answered anything but A (the correct response being precisely 30 percent), you have fallen victim to the representativeness heuristic again, despite having just read about it.

No. Most people have knowledge beyond what is in the description; so, starting from the appropriate prior probabilities, $p(law) = 0.7$ and $p(eng) = 0.3$, they update them with the fact that engineers like math more than lawyers, $p(math|eng) \gg p(math|law)$. For illustration consider

$p(math|eng) = 0.5$; half the engineers have math as a hobby.

$p(math|law) = 0.001$; one in a thousand lawyers has math as a hobby.

Then the posterior probabilities (once the description is known) are given by

$p(eng|math) = \frac{ p(math|eng) \times p(eng)}{p(math)}$

$p(law|math) = \frac{ p(math|law) \times p(law)}{p(math)}$

with $p(math) = p(math|eng) \times p(eng) + p(math|law) \times p(law)$. In other words, with the conditional probabilities above,

$p(eng|math) = 0.995$

$p(law|math) = 0.005$

Note that even if engineers as a rule don't like math, and only a small minority does, the probability is still much higher than 0.30 as long as the minority of engineers is larger than the minority of lawyers*:

$p(math|eng) = 0.25$ implies $p(eng|math) = 0.991$

$p(math|eng) = 0.10$ implies $p(eng|math) = 0.977$

$p(math|eng) = 0.05$ implies $p(eng|math) = 0.955$

$p(math|eng) = 0.01$ implies $p(eng|math) = 0.811$

$p(math|eng) = 0.005$ implies $p(eng|math) = 0.682$

$p(math|eng) = 0.002$ implies $p(eng|math) = 0.462$

Yes, that last case is a two-to-one ratio of engineers who like math to lawyers who like math; and it still falls outside the 10-40pct category.
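For anyone who wants to check these numbers, the update is a few lines of Python. This is only a sketch of Bayes' rule with the priors and the $p(math|law) = 0.001$ value used above; the function name and its default arguments are my own illustrative choices.

```python
def posterior_eng(p_math_given_eng, p_math_given_law=0.001, p_eng=0.3):
    """Posterior p(eng|math) via Bayes' rule when Jack is either an engineer or a lawyer."""
    p_law = 1.0 - p_eng
    # Total probability of having math as a hobby.
    p_math = p_math_given_eng * p_eng + p_math_given_law * p_law
    return p_math_given_eng * p_eng / p_math


for p in (0.5, 0.25, 0.10, 0.05, 0.01, 0.005, 0.002):
    print(f"p(math|eng) = {p:<5} -> p(eng|math) = {posterior_eng(p):.3f}")
```

Only when $p(math|eng)$ equals $p(math|law)$, that is, when the description carries no information, does the posterior fall back to the 0.30 prior.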

I understand the representativeness heuristic, which mistakes $p(math|eng)/p(math|law)$ for $p(eng|math)/p(law|math)$, ignoring the base rates, but there's no reason to give up the inference process if some data in the description is actually informative.

-- -- -- --

* This example shows the elucidative power of working through some numbers. One might be tempted to say "ok, there's some updating, but it will probably still fall under the 10-40pct category" or "you may get large numbers with a disproportionate example like one-half of the engineers and one-in-a-thousand lawyers, but that's just an extreme case." Once we get some numbers down, these two arguments fail miserably.

Numbers are like examples, personas, and prototypes: they force assumptions and definitions out in the open.

Tuesday, November 1, 2011

Less

I found a magic word and it's "less."

On September 27, 2011, I decided to run a lifestyle experiment. Nothing radical, just a month of no non-essential purchases, the month of October 2011. These are the lessons from that experiment.

Separate need, want, and like

One of the clearest distinctions a "no non-essential purchases" experiment required me to make was the split between essential and non-essential.

Things like food, rent, utilities, gym membership, Audible, and Netflix I categorized as essential, or needs. The first three for obvious reasons, the last three because the hassle of suspending them wasn't worth the savings.

A second category of purchases under consideration was wants, things that I felt that I needed but could postpone the purchase until the end of the month. This included things like Steve Jobs's biography, for example. I just collected these in the Amazon wish list.

A third category was likes. Likes were things that I wanted to have but knew that I could easily live without them. (Jobs's biography doesn't fall into this category, as anyone who wants to discuss the new economy seriously has to read it. It's a requirement of my work, as far as I am concerned.) I placed these in the Amazon wish list as well.

Over time, some things that I perceived as needs were revealed as simply wants or even likes. And many wants ended up as likes. This means that just by delaying the decision to purchase for some time I made better decisions.

This doesn't mean that I won't buy something because I like it (I do have a large collection of music, art, photography, history, science, and science fiction books, all of which are not strictly necessary). What it means is that the decision to buy something is moderated by the preliminary categorization into these three levels of priority.

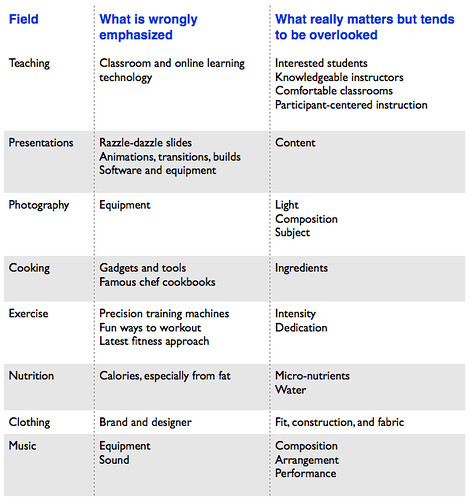

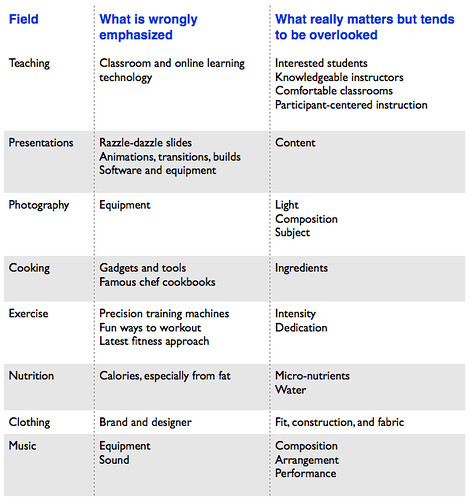

A corollary of this distinction is that it allows me to focus on what is really important in the activities that I engage in. I summarized some results in the following table (click for bigger):

One of the regularities of this table is that the entries in the middle column (things that are wrongly emphasized) tend to be things that are bought, while entries in the last column (what really matters) tend to be things that are learned or experienced.

Correct accounting focusses on time, not on nominal money

Ok, so I can figure out a way to spend less on things that are not that necessary. Why is this a source of happiness?

Because money to spend costs time and I don't even get all the money.

When I spend one hour working a challenging technical marketing problem for my own enjoyment, I get the full benefit of that one hour of work, in the happiness solving a puzzle always brings me. When I work for one hour on something that I'd rather not be doing for a payment of X dollars, I get to keep about half of those X dollars (when everything is accounted for). I wrote an illustration of this some time ago.

In essence, money to spend comes, at least partially, from doing things you'd rather not do, or doing them at times when you'd rather be doing something else, or doing them at locations that you'd rather not travel to. I like the teaching and research parts of my job, but there are many other parts that I do because it's the job. I'm lucky in that I like my job; but even so I don't like all the activities it involves.

The less money I need, the fewer additional things I have to do for money. And, interestingly, the higher my price for doing those things. (If my marginal utility of money is lower, you need to pay more for me to incur the disutility of teaching that 6-9AM on-location exec-ed seminar than you'd have to pay to an alternate version of me that really wants money to buy the latest glued "designer" suit.)

Clarity of purpose, not simply frugality, is the key aspect

I'm actually quite frugal, having never acquired the costly luxury items of a wife and children, but the lessons here are not about frugality, rather about clarity of purpose.

I have a $\$$2000 17mm ultra-wide angle tilt-shift lens on my wishlist, as a want. I do want to buy it, though I don't need it for now. Once I'm convinced that the lens on the camera, rather than my skills as a photographer, is the binding constraint in my photography, I plan to buy the lens. (Given the low speed at which my photography skill is improving, this may be a non-issue. ☺)

Many of our decisions are driven by underlying identity or symbolic reasons; other decisions are driven by narrowly framed problems; some decisions are just herd behavior or influenced by information cascades that overwhelm reasonable criteria; others still are purely hedonic, in-the-moment, impulses. Clarity of purpose avoids all these. I ask:

Why am I doing this, really?

I was surprised at how many times the answer was "erm...I don't know," "isn't everybody?" or infinitely worse "to impress X." These were not reasonable criteria for a decision. (Note that this is not just about purchase decisions, it's about all sorts of little decisions one makes every day, which deplete our wallets but also our energy, time, and patience.)

Clarity of purpose is hard to achieve during normal working hours, shopping, or the multiple activities that constitute a lifestyle. Borrowing some tools designed for lifestyle marketing, I have a simple way to do a "personal lifestyle review" using the real person "me" as the persona used in lifestyle marketing analysis. Adapted from the theory, it is:

1. Create a comprehensive list of stuff (not just material possessions, but relationships, work that is pending, even persons in one's life).

2. Associate each entry in the list of stuff with a sub-persona (for non-marketers this means a part of the lifestyle that is more or less independent of the others).

3. For each sub-persona, determine the activities which have given origin to the stuff.

4. Evaluate the activities using the "clarity of purpose" criterion: why am I doing this?

5. Purge the activities that are purely symbolic and those that were adopted for hedonic reasons but do not provide the hedonic rewards associated with their cost (in money, constraints to life, time, etc), plus any functional activities that are no longer operative.

6. Guide life decisions by the activities that survive the purge. Revise criteria only by undergoing a lifestyle review process, not by spur-of-the-moment impulses.

(This procedure is offered with no guarantees whatsoever; marketers may recognize the underlying structure from lifestyle marketing frameworks with all the consumer decisions reversed.)

Less. It works for me.

A final, cautionary thought: if the ideas I wrote here were widely adopted, most economies would crash. But I don't think there's any serious risk of that.

On September 27, 2011, I decided to run a lifestyle experiment. Nothing radical, just a month of no non-essential purchases, the month of October 2011. These are the lessons from that experiment.

Separate need, want, and like

One of the clearest distinctions a "no non-essential purchases" experiment required me to make was the split between essential and non-essential.

Things like food, rent, utilities, gym membership, Audible, and Netflix I categorized as essential, or needs. The first three for obvious reasons, the last three because the hassle of suspending them wasn't worth the savings.

A second category of purchases under consideration was wants, things that I felt I needed but whose purchase I could postpone until the end of the month. This included things like Steve Jobs's biography, for example. I just collected these in the Amazon wish list.

A third category was likes. Likes were things that I wanted to have but knew that I could easily live without. (Jobs's biography doesn't fall into this category, as anyone who wants to discuss the new economy seriously has to read it. It's a requirement of my work, as far as I am concerned.) I placed these in the Amazon wish list as well.

Over time, some things that I perceived as needs were revealed as simply wants or even likes. And many wants ended up as likes. This means that just by delaying the decision to purchase for some time I made better decisions.

This doesn't mean that I won't buy something because I like it (I do have a large collection of music, art, photography, history, science, and science fiction books, none of which is strictly necessary). What it means is that the decision to buy something is moderated by the preliminary categorization into these three levels of priority.

A corollary of this distinction is that it allows me to focus on what is really important in the activities that I engage in. I summarized some results in the following table:

One of the regularities of this table is that the entries in the middle column (things that are wrongly emphasized) tend to be things that are bought, while entries in the last column (what really matters) tend to be things that are learned or experienced.

Correct accounting focusses on time, not on nominal money

OK, so I can figure out a way to spend less on things that are not that necessary. Why is this a source of happiness?

Because money to spend costs time and I don't even get all the money.

When I spend one hour working on a challenging technical marketing problem for my own enjoyment, I get the full benefit of that one hour of work, in the happiness solving a puzzle always brings me. When I work for one hour on something that I'd rather not be doing for a payment of X dollars, I get to keep about half of those X dollars (when everything is accounted for). I wrote an illustration of this some time ago.
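A rough sketch of this time accounting in Python, for readers who like to see the arithmetic. The function name, the hourly pay of 100, and the 2000-dollar price are hypothetical values chosen for illustration; only the "keep about half" fraction comes from the paragraph above.

```python
def hours_of_work(price, hourly_pay, keep_fraction=0.5):
    """Hours of paid work needed to fund a purchase of `price`.

    keep_fraction is the share of nominal pay that is actually kept
    (about one half, per the text); hourly_pay is a made-up input.
    """
    if hourly_pay <= 0 or not 0 < keep_fraction <= 1:
        raise ValueError("hourly_pay must be positive and keep_fraction in (0, 1]")
    return price / (hourly_pay * keep_fraction)


# Hypothetical example: a 2000-dollar purchase at 100 dollars per hour.
nominal_hours = 2000 / 100              # what nominal accounting suggests
actual_hours = hours_of_work(2000, 100)  # what time accounting suggests
print(nominal_hours, actual_hours)
```

Under these assumed numbers the purchase costs twice as many hours as the nominal price suggests, which is the point of accounting in time rather than in money.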

In essence, money to spend comes, at least partially, from doing things you'd rather not do, or doing them at times when you'd rather be doing something else, or doing them at locations that you'd rather not travel to. I like the teaching and research parts of my job, but there are many other parts that I do because it's the job. I'm lucky in that I like my job; but even so I don't like all the activities it involves.

The less money I need, the fewer additional things I have to do for money. And, interestingly, the higher my price for doing those things. (If my marginal utility of money is lower, you need to pay more for me to incur the disutility of teaching that 6-9AM on-location exec-ed seminar than you'd have to pay to an alternate version of me that really wants money to buy the latest glued "designer" suit.)

Clarity of purpose, not simply frugality, is the key aspect

I'm actually quite frugal, having never acquired the costly luxury items of a wife and children, but the lessons here are not about frugality, rather about clarity of purpose.

I have a $\$$2000 17mm ultra-wide angle tilt-shift lens on my wishlist, as a want. I do want to buy it, though I don't need it for now. Once I'm convinced that the lens on the camera, rather than my skills as a photographer, is the binding constraint in my photography, I plan to buy the lens. (Given the low speed at which my photography skill is improving, this may be a non-issue. ☺)

Many of our decisions are driven by underlying identity or symbolic reasons; other decisions are driven by narrowly framed problems; some decisions are just herd behavior or influenced by information cascades that overwhelm reasonable criteria; others still are purely hedonic, in-the-moment impulses. Clarity of purpose avoids all these. I ask:

Why am I doing this, really?

I was surprised at how many times the answer was "erm...I don't know," "isn't everybody?" or, infinitely worse, "to impress X." These were not reasonable criteria for a decision. (Note that this is not just about purchase decisions, it's about all sorts of little decisions one makes every day, which deplete our wallets but also our energy, time, and patience.)

Clarity of purpose is hard to achieve during normal working hours, shopping, or the multiple activities that constitute a lifestyle. Borrowing some tools designed for lifestyle marketing, I have a simple way to do a "personal lifestyle review" using the real person "me" as the persona used in lifestyle marketing analysis. Adapted from the theory, it is:

1. Create a comprehensive list of stuff (not just material possessions, but relationships, work that is pending, even persons in one's life).

2. Associate each entry in the list of stuff with a sub-persona (for non-marketers this means a part of the lifestyle that is more or less independent of the others).

3. For each sub-persona, determine the activities which have given origin to the stuff.

4. Evaluate the activities using the "clarity of purpose" criterion: why am I doing this?

5. Purge the activities that are purely symbolic and those that were adopted for hedonic reasons but do not provide the hedonic rewards associated with their cost (in money, constraints to life, time, etc.), plus any functional activities that are no longer operative.

6. Guide life decisions by the activities that survive the purge. Revise criteria only by undergoing a lifestyle review process, not by spur-of-the-moment impulses.

(This procedure is offered with no guarantees whatsoever; marketers may recognize the underlying structure from lifestyle marketing frameworks with all the consumer decisions reversed.)

Less. It works for me.

A final, cautionary thought: if the ideas I wrote here were widely adopted, most economies would crash. But I don't think there's any serious risk of that.

Monday, October 24, 2011

Thinking skill, subject matter expertise, and information

Good thinking depends on all three, but they have different natures.

To illustrate, I'm going to use a forecasting tool called Scenario Planning to determine my chances of dating Milla Jovovich.

First we must figure out the causal structure of the scenario. The desired event, "Milla and I live happily ever after," we denote by $M$. Using my subject matter expertise on human relationships, I postulate that $M$ depends on a conjunction of two events:

- Event $P$ is "Paul Anderson – her husband – runs away with a starlet from one of his movies"

- Event $H$ is "I pick up the pieces of Milla's broken heart"

So the scenario can be described by $P \wedge H \Rightarrow M$. And probabilistically, treating $P$ and $H$ as independent and $M$ as happening only through this route,

$\Pr(M) = \Pr(P) \times \Pr(H).$

Now we can use information from the philandering of movie directors and the knight-in-shining-armor behavior of engineering/business geeks [in Fantasyland, where Milla and I move in the same circles] to estimate $\Pr(P) =0.2$ (those movie directors are scoundrels) and $\Pr(H)=0.1$ (there are other chivalrous nerds willing to help Milla) for a final result of $\Pr(M)=0.02$, or 2% chance.

Of course, scenario planning allows for more granularity and for sensitivity analysis.

We could decompose event $P$ further into a conjunction of two events, $S$ for "attractive starlet in Paul's movies" and $I$ for "Paul goes insane and chooses starlet over Milla." We could now determine $\Pr(P)$ from these events instead of estimating it directly at 0.2 from the marital unreliability of movie directors in general, using $\Pr(P) = \Pr(S) \times \Pr(I).$
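Written out, and assuming as the product rule above does that the probabilities of the component events simply multiply, the decomposed scenario becomes

$\Pr(M) = \Pr(S) \times \Pr(I) \times \Pr(H).$

With purely illustrative numbers that are not estimates from any data, say $\Pr(S) = 0.5$ and $\Pr(I) = 0.4$, this recovers $\Pr(P) = 0.5 \times 0.4 = 0.2$ and the same final result, $\Pr(M) = 0.5 \times 0.4 \times 0.1 = 0.02$.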

Or, going in another direction, we could do a sensitivity analysis. Instead of assuming a single value for $\Pr(P)$ and $\Pr(H)$, we could find upper and lower bounds, say $0.1 < \Pr(P) < 0.3$ and $0.05 < \Pr(H) < 0.15$. This would mean that $0.005 < \Pr(M) < 0.045$.
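The sensitivity analysis above can be sketched in a few lines of Python. This is a minimal illustration, not a scenario planning tool: the function names are hypothetical, and evaluating only the corners of the input ranges is enough here because the product of two probabilities is increasing in each of them.

```python
from itertools import product


def scenario_probability(pr_p, pr_h):
    """Pr(M) = Pr(P) * Pr(H), the product rule used in the text."""
    return pr_p * pr_h


def sensitivity_bounds(p_range, h_range):
    """Evaluate Pr(M) at every corner of the input ranges and
    return the smallest and largest values found."""
    corners = [scenario_probability(p, h) for p, h in product(p_range, h_range)]
    return min(corners), max(corners)


point = scenario_probability(0.2, 0.1)
low, high = sensitivity_bounds((0.1, 0.3), (0.05, 0.15))
print(f"point estimate: {point:.3f}")
print(f"range: {low:.3f} to {high:.3f}")
```

Nothing in the code depends on what $P$, $H$, and $M$ mean; the same two functions would serve for any scenario with this structure.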

Of course, if instead of the above interpretation we had

- Event $P$ is "contraction in the supply of carbon fiber"

- Event $H$ is "increase in the demand for lightweight camera tripods and monopods"

- Event $M$ is "precipitous increase in price and shortages of carbon fiber tennis rackets"

the same scenario planning would be used for managing the provisioning logistics of a sports retailer.

Which brings us to the three different competencies needed for scenario planning, and more generally, for thinking about something:

Thinking skill is, in this case, knowing how to use scenarios for planning. It includes knowing that the tool exists, knowing what its strengths and weaknesses are, how to compute the final probabilities, how to do sensitivity analysis, and other procedural matters. All the computations above, which don't depend on what the events mean, are pure thinking skill.

Subject matter expertise is where the specific elements of the scenario and their chains of causality come from. It includes knowing what to include and what to ignore, understanding how the various events in a specific subject area are related, and understanding the meaning of the events (as opposed to just computing inferential chains like an algorithm). So knowing that movie directors tend to abandon their wives for starlets allows me to decompose the event $P$ into $S$ and $I$ in the Milla example. But only an expert in the carbon fiber market would know how to decompose $P$ when it becomes the event "contraction in the supply of carbon fiber."

Information, in this case, is the set of probabilities used as inputs for calculation, as long as those probabilities come from data. (Some of these, of course, could be parameters of the scenario, which would make them subject matter expertise. Also, instead of a strict implication we could have probabilistic causality.) For example, the $\Pr(P)=0.2$ could be a simple statistical count of how many directors married to fashion models leave their wives for movie starlets.

Of these three competencies, thinking skill is the most transferable: knowing how to do the computations associated with scenario planning allows one to do them in military forecasting or in choice of dessert for dinner. It is also one that should be carefully learned and practiced in management programs but typically is not given the importance its real-world usefulness would imply.

Subject matter expertise is the hardest to acquire – and the most valuable – since it requires both acquiring knowledge and developing judgment. It is also very hard to transfer: understanding the reactions of retailers in a given area doesn't transfer easily to forecasting nuclear proliferation.

Information is problem-specific and, though it may cost money, it doesn't require either training (like thinking skill) or real learning (like subject matter expertise). Knowing which information to get requires both thinking skill and subject matter expertise, of course.

Getting these three competencies confused leads to hilarious (or tragic) choices of decision-maker. For example, the idea that "smart is what matters" in recruiting for specific tasks ignores the importance of subject matter expertise.*

Conversely, sometimes a real subject matter expert makes a fool of himself when he tries to opine on matters beyond his expertise, even ones that are simple. That's because he may be very successful in his area due to the expertise making up for faulty reasoning skills, but in areas where he's not an expert those faults in reasoning skill become apparent.

Let's not pillory a deceased equine by pointing out the folly of making decisions without information; on the other hand, it's important to note the idiocy of mistaking someone who is well-informed (and just that) for a clear thinker or a knowledgeable expert.

Understanding the structure of good decisions requires separating these three competencies. It's a pity so few people do.

-- -- -- --

* "Smart" is usually a misnomer: people identified as "smart" tend to be good thinkers, not necessarily those who score highly on intelligence tests. Think of intelligence as raw strength and thinking as Olympic weightlifting: the first helps the second, but strength without skill is irrelevant. In fact, some intelligent people end up being poor thinkers because they use their intelligence to defend points of view that they adopted without thinking and that turned out to be seriously flawed.

Note 1: This post was inspired by a discussion about thinking and forecasting with a real clear thinker and also a subject matter expert on thinking, Wharton professor Barbara Mellers.

Note 2: No, I don't believe I have a 2% chance of dating Milla Jovovich. I chose that example precisely because it's so far from reality that it will give a smile to any of my friends or students reading this.

Saturday, October 15, 2011

The costly consequences of misunderstanding cost

Apparently there's a growing scarcity of some important medicines. And why wouldn't there be?

Some of these medicines are off-patent, some are price-controlled (at least in most of the world), some are bought at "negotiated" prices where one of the parties negotiating (the government) has the power to expropriate the patent from the producer. In other words, their prices are usually set at variable cost plus a small markup.

Hey, says Reggie the regulator, they're making a profit on each pill, so they should produce it anyway.

(Did you spot the error?)

(Wait for it...)

(Got it yet?)

Dear Reggie: pills are made in these things called "laboratories," which are really factories. Factories, you may be interested to know, have something called "capacity constraints," which means that using a production line for making one type of pill precludes that production line from making a different kind of pill. Manufacturers are in luck, though, because most production lines can be repurposed from one medication to another at a relatively small reconfiguration cost.