This is part of the ongoing book project, though it might be offloaded into a technical notes supplement, since it requires a bit of calculus to follow completely. Clearly this book is targeted at a mass market.

A good theory versus A-B testing to exhaustion

There's nothing more practical than a good theory.

Coupled with experimental measurement of the relevant parameters, of course.

But this is very different from the ``let's just run an experiment'' approach of many theory-less areas (or areas that have ``theories'' that don't describe the real world, but are just coordinating devices for in-group vs out-group signaling, as can be found in certain fields in academe suffering from serious Physics-envy).

Our illustration will be how to calculate how long it takes a mass to drop from height $h$, in negligible atmosphere, in a planet with gravity $g$ (not necessarily Earth).

The "A-B testing to exhaustion" approach would be: for any height $h$ that we care about, run a few, say 100, test drops; average (or otherwise process) the resulting times; and report that average. This would require a new set of test drops for each $h$, just like A-B testing (by itself) requires testing each design.

The advantage of having a working theory is that we don't need to test each $h$ or design. Instead we use experiments to calibrate estimates for important parameters (in the example, the gravity) and those parameters can then be used to solve for every situation (the different heights of the drop).

Note that if we want to interpolate across different $h$ we would need a theory to do so; simple (linear) interpolation would be wrong as the time is a non-linear function of height; the non-linearity itself would be evident from a few values of $h$, but the standard empirical generalization attempt of fitting a polynomial function would also fail. (It's a square root; naughty example, isn't it?)

Yes, it's either theory plus calibration or a never-ending series of test drops. So let's use theory.

We know, from basic kinematics that $h = g t^2/2$, so the time it takes for a mass to drop from a height $h$, given the gravity $g$ of the planet, is

\[t(h;g) = \sqrt{2h/g}.\]

So what we need, when we arrive at a given planet, is the $g$ for that planet. And that brings up the measurement problem and one big difference between frequentists and Bayesians.

Let's say we use a timed drop of 1 meter and get some data $D$, then compute an estimate $\hat{g}$ from that data. Say we dropped a mass 100 times from 1 meter and the average drop time was 1.414 seconds; we therefore estimate $\hat g = 1$ m/s$^2$ by solving the kinematic equation. (Note that this is not ``one gee,'' the gravity of Earth, which is 9.8 m/s$^2$.)

What most of us would do at this point (and what is generally done in the physical sciences, after being told to be careful about it in the first labs class one takes in the freshman year) is to plug that estimate into the formula as if it was the true value of the parameter $g$, so:

\[\hat t(h;\hat g) = \sqrt{2h/\hat g}.\]

(What the instructors for the freshman labs classes say at this point is to track the precision of the measurement instruments and not to be more certain of the calculations than that precision would justify. This is promptly forgotten by everyone, including the instructor.)

So far everything is proceeding as normal, and this is the point where the Bayesians start tut-tutting the frequentists.

When parameters really are random variables

The $\hat g$ we're plugging into the $\hat t$ formula is a function of the data $\hat g = \hat g(D)$ and the data is a set of random variables, as experimental stochastic disturbances, including those inside the measurement apparatus (the person operation the stopwatch, for example) create differences between the measured quantity and the theory.

So, if $\hat g$ is a function of random variables, it is itself a random variable, not a constant parameter.

(Well, duh!, says Fred the Frequentist, unaware of the upcoming trap.)

Now, by the same logic, if $\hat t(h;\hat g)$ is a function of a random variable, $\hat g$, then $\hat t$ is also a random variable, and if we want to compute a time for a given drop from height $h$, it has to be an estimated time, in other words, the expected value of $\hat t(h;\hat g)$,

\[E\left[\hat t(h;\hat g)\right] = \int_{-\infty}^{+\infty} \sqrt{2h/x} \, f_{\hat g}(x) \, dx\]

where $f_{\hat g}(x)$ is the probability density function for the random variable $\hat g$ evaluated at point $\hat g = x$.

(Huh... erm... says Fred the Frequentist, now aware of the trapdoor open beneath his mathematical feet.)

We can use a Taylor expansion on the function $\hat t(h;x)$ around $\hat g$ to get:

\[\sqrt{2h/x} = \sqrt{2h/\hat g} - \frac{\sqrt{h}}{\sqrt{2 \hat{g}^3}} \times (x- \hat g) + O(x^2)\]

Where $O(x^2)$ are higher-order terms that we'll ignore for simplicity. Replacing that expansion into the expected time formula, we get

\[E\left[\hat t(h;\hat g)\right] = \sqrt{2h/\hat g} - \int_{-\infty}^{+\infty} \frac{\sqrt{h}}{\sqrt{2 \hat{g}^3}} \times (x- \hat g) \, f_{\hat g}(x) \, dx \quad + \cdots\]

And when we compare that with the frequentist formula we note that the first term is the same (because it's a constant, the integral just adds up to one), but there's a bunch of other terms missing in the frequentist formula. Those terms correct for the effects of randomness in the running of experiments.

Despite the minus sign, the second term is actually positive because we integrate over the distribution for $\hat g$, which is positively skewed (with more probability mass for the $x$ below the mean of $\hat g$ than above), which means that using the naif estimate, Fred the Frequentist would underestimate the drop times.

But the main point is that Fred the Frequentist would always be wrong.

But wait, there's more!

It gets worse, much worse.

Let's go back to that measurement from $h=1$ in a planet with $g =1$.

Data points are measured with error. That error, allegedly for a variety of the reasons that statistics instructors enumerate but in reality because it's convenient, is assumed Normally distributed with mean zero and some variance. Let's call it $\epsilon_i$ for each measurement $t_i$ (all for the same test height of one meter), so that

\[t_i = t + \epsilon_i\]

where $t = \sqrt{2/g}$ is the theoretical time for a one meter drop if there were no error and we knew the true $g$; with the numbers we're using here, $t= 1.414$ seconds.

How does Fred the Frequentist usually estimate the $\hat g$ from the $t_i$? Using the kinematics formula, we know that $g = 2h/t^2$, so the "obvious" thing to do is to compute

\[\hat g = 1/N \, \sum_{i} 2h/t_{i}^2\]

where $N$ is the number of drops in an experiment, and then to treat $\hat g$ as a constant when using it in the theoretical formula (as seen above) and as a Normal distributed variable for testing and confidence interval purposes.

Oh, wait a minute.

A sum of Normal random variables is a Normal random variable. But there's a inverse square in the $\hat g$ sum: $2h/t_{i}^2$. And since $t_i = t + \epsilon_i$, the square alone is going to have both a constant $t^2 = 2$ term and two random variables: $2 t \epsilon_i = 2.828 \epsilon_i $, which is Normal, and $\epsilon_i^2$, which is not. And then there's the inverse part.

So, $\hat g$ is a random variable that is the sum of the inverses of the sum of a constant $2$, a Normal random variable, $2.828 \epsilon_i$, and a non-Normal random variable $\epsilon_i^2$. $\hat g$ is not a Normal random variable, in other words.

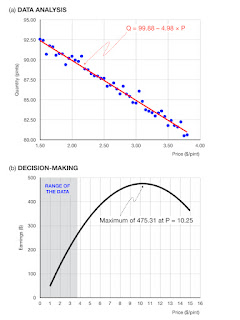

The figure shows the distribution of 10,000 estimates of $\hat g$ obtained by simulating, for each, 100 drops from 1 meter, with an $\epsilon_i$ Normally distributed with mean zero and variance 0.5, and using the simulated times to compute a $\hat g$ for that experiment. Doing that 10,000 times we see a distribution of values for the $\hat g$, characterized by two main properties: it is biased up (the true $g$ is 1, the mean of the $\hat g$ is 1.1); and the distribution is positively skewed, with a long tail on the right side, not Normal.

(The discrete plot appears negatively skewed with a right tail, which is weird; the tail is real, the apparently reversed skew is an artifact of the discreteness of the histogram bins. The mean above the median confirms the positive skewness.)

Pretty much all the testing done using standard tests (i.e. dependent on the assumption of Normality for the $\hat g$ result) is done ignoring these transformations.

This happens a lot in business, when observed variables are turned into indices, for example KPIs, and then these indices are treated, for testing and confidence interval purposes, as if they were Normal random variables. (It also happens in some fields in academe, but horse, dead, flogging...)

Okay, there's a weak response from Fred the Frequentist

The typical counter of Frequentists to Bayesians is that the latter have to make a lot of assumptions to estimate models, and some of those assumptions are not very easy to justify in terms that don't sound suspiciously like ``this particular distribution happens to make the calculus simpler.''

Still, there's no getting around the problems of using point estimates as if they were real parameters and using tests devised for Normally distributed variables on indices that are nothing like Normal random variables.

And that's on the frequentists.